A DNS failure inside Amazon’s US-EAST-1 region briefly fractured the backbone of the modern internet — exposing how much of the world runs through a few square miles of Northern Virginia.

A Local Fault with Global Reach

Just after 3 a.m. ET Monday, Amazon Web Services (AWS) suffered a major outage in its US-EAST-1 region, located in Northern Virginia.

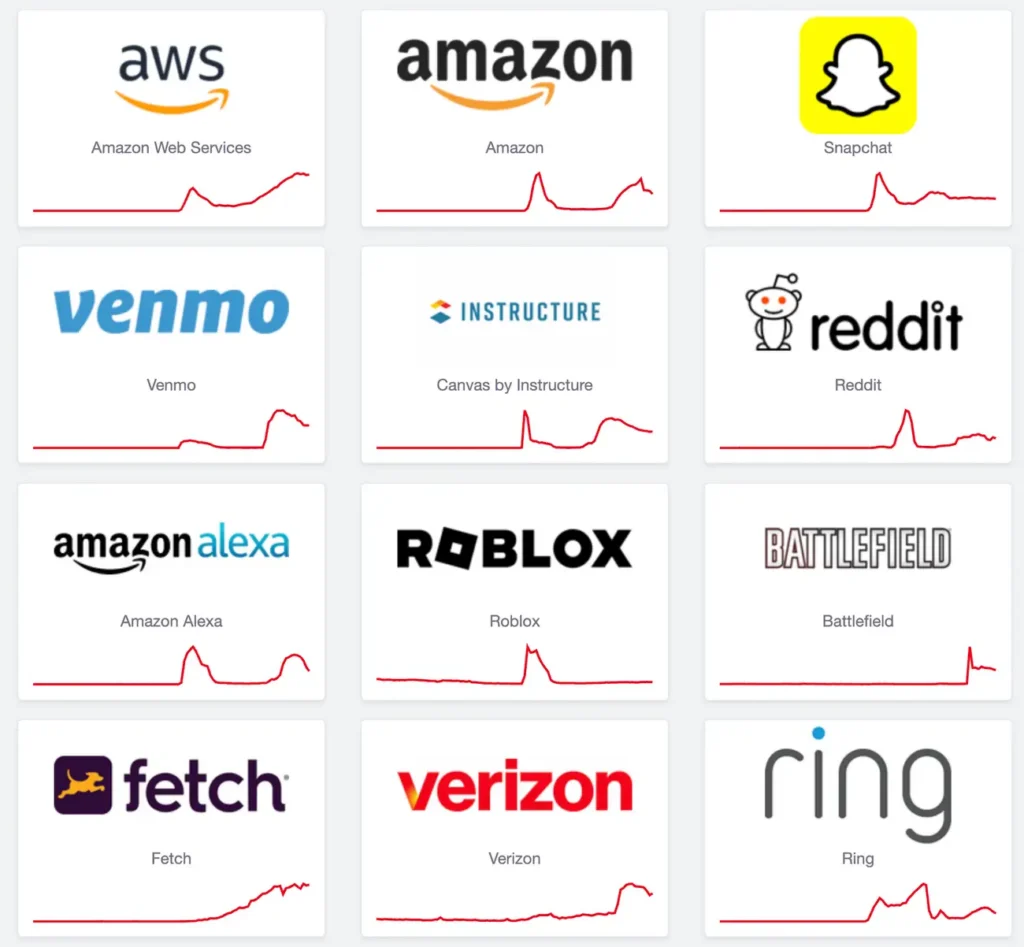

Within minutes, applications and platforms across the world — from Reddit, Perplexity AI, and Venmo to airlines, telecom providers, and workplace apps — began reporting failures.

By mid-morning, more than 100 AWS services were degraded, including DynamoDB, Redshift, and Config.

At its peak, the incident produced elevated API error rates and DNS resolution failures, breaking connections between services inside AWS and between AWS and the wider internet.

Although Amazon announced that all 142 impacted services had “returned to normal operations” by 6:53 p.m. ET, the waves of downtime demonstrated how dependent global connectivity has become on a handful of hyperscale regions.

The DNS Factor

Amazon confirmed that the core failure involved a network load-balancer subsystem and DNS resolution, the process that translates domain names into IP addresses.

When DNS resolution falters inside a cloud provider’s internal network, dependent services can’t translate hostnames into IP addresses, which makes healthy servers effectively unreachable.
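To make that failure mode concrete, here is a minimal Python sketch, assuming a client that must resolve a hostname before it can open any connection. The names `system_resolver`, `reachable_addresses`, and the `Resolver` alias are hypothetical and purely illustrative, not an AWS API; the two demo resolvers stand in for a working DNS path and a failing one.

```python
import socket
from typing import Callable, List, Tuple

# Hypothetical type alias: a resolver maps a hostname to a list of IP strings.
Resolver = Callable[[str], List[str]]


def system_resolver(hostname: str) -> List[str]:
    """Resolve via the operating system's getaddrinfo(); raises socket.gaierror on failure."""
    infos = socket.getaddrinfo(hostname, 443, proto=socket.IPPROTO_TCP)
    # Each entry's sockaddr tuple starts with the IP address (IPv4 or IPv6).
    return sorted({info[4][0] for info in infos})


def reachable_addresses(hostname: str, resolve: Resolver) -> Tuple[bool, List[str]]:
    """Return (resolved, addresses) for a hostname using the given resolver."""
    try:
        addrs = resolve(hostname)
    except socket.gaierror:
        # Resolution failed: the client never learns an IP to connect to,
        # even if every server behind the name is healthy.
        return False, []
    return bool(addrs), addrs


if __name__ == "__main__":
    def healthy_dns(hostname: str) -> List[str]:
        return ["192.0.2.10"]  # documentation-range address (RFC 5737)

    def failing_dns(hostname: str) -> List[str]:
        raise socket.gaierror("simulated resolver outage")

    print(reachable_addresses("api.example.com", healthy_dns))  # (True, ['192.0.2.10'])
    print(reachable_addresses("api.example.com", failing_dns))  # (False, [])
```

The servers behind `api.example.com` are identical in both calls; only the lookup differs, and that alone decides whether the client can reach them.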

This is not a simple web-app failure; it is a fault in the resolution layer that underpins nearly all cloud traffic.

Such DNS-centric disruptions create a multiplier effect: failures cascade through authentication, database, and API services, producing timeouts far beyond the initial fault zone.
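One way to see the multiplier is a toy Python model, assuming a request passes through several service layers (say, gateway, authentication, session store) and each layer retries the layer below independently, with no shared deadline or backoff. The layer count, retry count, and the `call_chain`/`CallCounter` names are illustrative assumptions, not figures from the AWS incident.

```python
from dataclasses import dataclass


@dataclass
class CallCounter:
    """Counts how many requests reach the failed bottom dependency."""
    calls: int = 0


def call_chain(depth: int, attempts: int, counter: CallCounter) -> bool:
    """Simulate one request through `depth` service layers.

    Each layer makes `attempts` tries (1 initial try + retries) against the layer
    below. The bottom dependency (depth == 0), e.g. a datastore whose endpoint no
    longer resolves, always fails.
    """
    if depth == 0:
        counter.calls += 1
        return False  # the unreachable dependency never succeeds
    for _ in range(attempts):
        if call_chain(depth - 1, attempts, counter):
            return True
    return False  # every attempt at this layer failed; the failure propagates up


if __name__ == "__main__":
    counter = CallCounter()
    ok = call_chain(depth=3, attempts=3, counter=counter)
    print(ok, counter.calls)  # False 27, i.e. 3 ** 3 calls for one user request
```

Under these assumptions, one user request becomes `attempts ** depth` calls against the broken dependency, and every layer waits out its own timeouts before giving up, which is how a single failed lookup turns into timeouts several hops away.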

How Virginia Became the Internet’s Choke Point

The US-EAST-1 cluster in Loudoun County, Virginia — part of the area nicknamed Data Center Alley — is the largest AWS region in the world.

Virginia hosts 663 data centers, more than any other state, out of roughly 4,000 across the U.S.

“If you’re going to use AWS, you’re going to use US-EAST-1 regardless of where you are on planet Earth. We have an incredible concentration of IT services hosted out of one region by one cloud provider, and that presents a fragility for modern society.”

– Doug Madory, Director of Internet Analysis at Kentik.

The convenience of centralization has a cost: it erodes redundancy. When one region becomes the default endpoint for global workloads, network routes, DNS lookups, and object storage calls all converge there, forming a digital choke point.

A Chain Reaction Across Dependent Networks

As the outage unfolded, monitoring platforms such as DownDetector showed spikes in outage reports for Amazon, Venmo, Zoom, Strava, and even telecom providers like AT&T and Verizon.

These network operators weren’t technically down — but many of their customers couldn’t reach AWS-hosted endpoints.

United Airlines reported temporary downtime on its booking systems; Robinhood, Snapchat, and Perplexity AI all confirmed interruptions directly tied to AWS.

Each of these failures illustrates how dependence on a few upstream infrastructure providers translates into real-world business risk.

Lessons for Network Engineers

For organizations relying on AWS, Google Cloud, or Azure, Monday’s outage highlights several critical best practices:

- Use Multi-Region DNS and Multi-Cloud Failover: Even a simple secondary zone in another region can preserve uptime for DNS-driven services.

- Diversify BGP Announcements: Route critical prefixes through multiple carriers or IXPs to reduce dependency on a single upstream provider.

- Monitor Resolver Health, Not Just Endpoint Latency: Many outages manifest first in resolver timeout metrics long before full service loss.

- Design with Independent Identity Systems: Authentication and user session tokens tied to a single cloud region can lock users out even if the app itself is running elsewhere.
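The secondary-DNS and resolver-health items above can be illustrated together. The Python sketch below is client-side and assumes two independent resolution paths (for instance, resolvers or DNS providers in different regions). The resolver functions, the 50-lookup window, and the 0.5 failure threshold are hypothetical choices, not settings from any AWS or DNS product. It records every lookup outcome, tracks each resolver's recent failure rate, and demotes a resolver that keeps failing.

```python
import socket
from collections import deque
from typing import Callable, Deque, Dict, List, Sequence, Tuple

# Hypothetical resolver type: hostname -> list of IP address strings.
Resolver = Callable[[str], List[str]]


class ResolverHealth:
    """Sliding-window failure rate (errors, timeouts, empty answers) for one resolver."""

    def __init__(self, window: int = 50) -> None:
        self.results: Deque[bool] = deque(maxlen=window)  # True = successful lookup

    def record(self, ok: bool) -> None:
        self.results.append(ok)

    def failure_rate(self) -> float:
        if not self.results:
            return 0.0
        return self.results.count(False) / len(self.results)


def resolve_with_failover(
    hostname: str,
    resolvers: Sequence[Tuple[str, Resolver]],  # (name, resolver) in priority order
    health: Dict[str, ResolverHealth],
    max_failure_rate: float = 0.5,
) -> List[str]:
    """Try resolvers in priority order, demoting any whose recent failure rate
    exceeds max_failure_rate; record every outcome for monitoring."""

    def is_unhealthy(pair: Tuple[str, Resolver]) -> bool:
        return health.setdefault(pair[0], ResolverHealth()).failure_rate() > max_failure_rate

    # Stable sort: healthy resolvers keep their priority order and go first;
    # unhealthy ones are still tried as a last resort.
    ordered = sorted(resolvers, key=is_unhealthy)
    last_error: Exception = socket.gaierror("no resolvers configured")
    for name, resolve in ordered:
        try:
            addrs = resolve(hostname)
        except (socket.gaierror, socket.timeout, TimeoutError) as exc:
            health[name].record(False)
            last_error = exc
            continue
        health[name].record(bool(addrs))
        if addrs:
            return addrs
        last_error = socket.gaierror(f"{name} returned no addresses")
    raise last_error


if __name__ == "__main__":
    def primary_dns(hostname: str) -> List[str]:
        raise socket.gaierror("simulated outage in primary region")

    def secondary_dns(hostname: str) -> List[str]:
        return ["198.51.100.7"]  # documentation-range address (RFC 5737)

    stats: Dict[str, ResolverHealth] = {}
    pairs = [("primary", primary_dns), ("secondary", secondary_dns)]
    print(resolve_with_failover("api.example.com", pairs, stats))  # ['198.51.100.7']
    print(stats["primary"].failure_rate())  # 1.0: alert on this, not just endpoint latency
```

Exporting `failure_rate()` per resolver yields the kind of early warning the monitoring item describes. Authoritative-side failover (health-checked DNS records served from more than one region) is a separate, complementary layer that this sketch does not model.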

Resilience Beyond Convenience

According to RIPE NCC’s Hisham Ibrahim, centralization is a human, not technical, choice.

“Companies providing online services often decide to use large cloud platforms rather than building and maintaining their own infrastructure. Outages like this ripple outward when critical components such as databases or authentication systems are hosted in one environment.”

The global internet — 76,000 interconnected autonomous systems — didn’t collapse; it simply revealed where the bottlenecks are.

The infrastructure held, but our dependency model did not.

Preparing for the Next Outage

Last year’s CrowdStrike software update caused global service interruptions across airlines and hospitals, underscoring that digital resilience depends as much on architecture as on software quality.

The major outages of 2019 through 2025, including Cloudflare, Fastly, Facebook, and now AWS, had different triggers but shared the same amplifier: centralized infrastructure without sufficient regional independence.

For IPv4 asset managers, network operators, and cloud architects, the message is clear:

- Redundancy is not optional.

- DNS, routing, and IP allocation strategies must be treated as part of business continuity — not merely engineering hygiene.
